ISSN: 1204-5357


AMAL NAIN CHOPRA

Applied Physics Department, Maharaja Agrasen Institute of Technology, GGSIP University, New Delhi, India

Journal of Internet Banking and Commerce

The studies related to the concepts and applications of Artificial Intelligence, and some important coevolving modern issues in the management of organizations and jobs, have been reviewed and discussed. The concepts of Artificial Intelligence relevant to efficient management, such as neural networks and a theoretical approach to artificial intelligence based on quantum computing, have been explained, the latter by discussing spins on the basis of qubits. Simulation for the optimization of learning in organizations, and the management of organizations, has been presented and discussed. The paper is expected to be useful not only for students, teachers, and researchers in Business Management Studies, but also for top management executives engaged in tackling the

Business Management; Artificial Intelligence; Neural Networks; Quantum Computing; Simulation for Optimization of Learning in Organization

In view of the very complicated problems faced by companies in an increasingly complex corporate world, it is being felt that novel techniques based on neural networks and simulation, related to Artificial Intelligence, need to be employed for the smooth and efficient functioning of companies and the corporate world. The discussion of Artificial Intelligence (AI) presented here is not meant as a set of predictions or forecasts; rather, it is a detailed analysis of (i) the trajectory of the field, and (ii) the trends and the technical needs that have to be met in order to achieve useful AI.

It must be noted that not all machine learning amounts to artificial intelligence. The aim of AI is to achieve human and super-human abilities in machines, which can be used for routine and special purposes, including autonomous vehicles, smart homes, and artificial assistants. Other common examples in daily life are surveillance drones, robot assistants for mobile devices, and full-time assistants capable of hearing and seeing. Researchers are also engaged in developing a fully autonomous synthetic entity, capable of behaving like a human and of giving human-level or even better performance on routine tasks.

A very common example of AI is software built from neural network architectures that are trained with an optimization algorithm to solve a specific task, e.g. profit maximization by optimizing costs, resources, and the number of employees in a company. Neural networks have in fact become the de facto tool for learning to solve such tasks, particularly supervised learning for categorizing items from a large dataset.
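As a minimal sketch of what "a neural network trained with an optimization algorithm" means, the code below trains the smallest possible network, a single sigmoid neuron, by full-batch gradient descent on a supervised categorization task. The six data points, the learning rate, and the number of epochs are hypothetical values chosen for illustration; they are not taken from any study cited here.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data, lr=1.0, epochs=2000):
    """Fit the weights w and bias b of one sigmoid neuron by minimising the
    mean cross-entropy loss with full-batch gradient descent."""
    w = [0.0, 0.0]
    b = 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for (x1, x2), y in data:
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)
            err = p - y  # derivative of the cross-entropy w.r.t. the pre-activation
            grad_w[0] += err * x1
            grad_w[1] += err * x2
            grad_b += err
        # Step against the averaged gradient.
        w[0] -= lr * grad_w[0] / n
        w[1] -= lr * grad_w[1] / n
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x):
    """Categorise x as 1 when the neuron's output is at least 0.5."""
    return 1 if sigmoid(w[0] * x[0] + w[1] * x[1] + b) >= 0.5 else 0


# Hypothetical toy dataset: label 1 when both features are "large".
DATA = [((0.0, 0.0), 0), ((0.2, 0.3), 0), ((0.1, 0.5), 0),
        ((1.0, 1.0), 1), ((0.9, 0.8), 1), ((0.7, 1.2), 1)]

if __name__ == "__main__":
    w, b = train_neuron(DATA)
    accuracy = sum(predict(w, b, x) == y for x, y in DATA) / len(DATA)
    print(f"weights={w}, bias={b:.3f}, training accuracy={accuracy:.2f}")
```

Real applications replace the single neuron with many layers and the toy points with a large labelled dataset, but the training loop has the same shape: predict, measure the error, and adjust the parameters along the negative gradient.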

Artificial Intelligence in Management

It is now well recognized that artificial intelligence is going to have a great influence on the management of organizations and jobs in the near future, which has drawn great interest from researchers in this field. It is evident from the MIT Sloan Management Review's website [1] that this emerging topic has started drawing attention and interest; it has been observed to be among the most popular topics in journals, magazines, and reports, and among the most sought-after blog posts.

It is important to realize that, along with AI, several coevolving and timely issues have been the subject of interest: digital transformation, design thinking, innovation, strategy execution, problem formulation, predictive analytics, and, most importantly, negative emotions in the workplace. The combined impact of these factors has led researchers at top management institutes, including MIT (USA), Stanford (USA), Oxford (UK), the Rotman School of Management (Toronto, Canada), and the IIMs (India), to choose these topics for their research.

A comprehensive study by Accenture, one of the leading players in the field of management consulting, has predicted that the implementation of AI will result in the creation of many novel types of jobs that will help in the systematic and efficient management of organizations, improving their performance as well as their outputs and profits.

One of the very important findings of the MIT Sloan Management Review report [1] on data and analytics, sponsored by SAS, is that the percentage of companies gaining a competitive advantage by using analytics has increased for the first time in four years, indicating that the technique is now being applied more methodically and systematically, which augurs well for commerce and industry. It is also important to note that Big Data techniques, just like artificial intelligence, are very powerful and efficient tools for management practice, and interest has been growing in the combined application of the two, with very good results. In fact, Big Data has made the combination of AI and machine learning a powerful one. Three professors from the University of Toronto's Rotman School of Management, working on artificial intelligence, have explained and emphasized that advances in artificial intelligence are expected to substantially change the workplace, as well as the jobs and experience of managers. The Boston Consulting Group has also presented a realistic baseline that can guide companies in comparing their AI aims and efforts with those of competing companies.

It is now becoming clear that the corporate world is being disrupted, and hence companies have to find ways of not only surviving but also thriving. As explained by the well-known management expert, MIT Sloan professor Erik Brynjolfsson, the problem we face today is not a world without work, but a world with rapidly changing work. This requires managers to stay on their toes and to keep taking new steps in order to keep pace with constantly changing companies.

Neural Networks

It is to be understood that although neural network architectures have the advantage of learning the parameters of an algorithm automatically and systematically from data, which is far superior to hand-crafted features, the architectures trained to solve a specific task by learning from data still have to be designed by hand. In fact, it is still a limitation of this field that these architectures are crafted by hand by people with a lot of experience. Although research in this direction is being carried out at present, much more needs to be done to reach a good level in such work. It is important to note that although neural network architectures are indeed the fundamental tool of learning algorithms capable of mastering a new task, they are able to do so only when the network architecture is correct. At present, learning a neural network architecture from data relies on experimenting with multiple architectures on a large dataset, which is quite tedious and time-consuming; this serious problem needs the attention of dedicated researchers. In the case of supervised learning, help has to be offered at every instance, much as workers have to be guided to perform correctly and efficiently.

Brenden et al. [2] have pointed out and discussed how the recent progress in artificial intelligence has created interest in building systems that can learn and think like human beings. They have explained that many advances have come from using deep neural networks trained end-to-end in tasks like object recognition, video games, and board games, achieving performance that equals or even beats that of humans in certain respects. In spite of their biological inspiration and performance achievements, these systems differ from human intelligence in many important ways. Brenden et al. [2] have reviewed the progress in cognitive science and have suggested that truly human-like learning and thinking machines will have to go beyond the present engineering trends in both what they learn and how they learn it. As argued by them, these machines must be able to build causal models of the world that support explanation and understanding, rather than merely solving pattern recognition problems; to ground learning in intuitive theories of physics and psychology; and to harness compositionality and learning-to-learn in order to rapidly acquire and generalize knowledge to new tasks and situations. Brenden et al. [2] have also suggested concrete challenges and promising routes towards goals that combine the strengths of recent neural network advances with more structured cognitive models, so that new advanced systems can be developed. In view of the importance of the subject, a surge in such studies has recently been noticed [1,3-12].

Predictive Neural Networks

The present neural networks have a major limitation: they lack an important feature of the human brain, namely its predictive power. The human brain works constantly by making predictions (predictive coding). Our predictions help us understand the world through our cognitive abilities, which are clearly linked to the attention mechanism in the brain; this innate ability lets us focus on particular aspects of our sensory inputs, e.g. sensing a threat and then avoiding it.

Thus, it is clear that predictive neural networks need to be developed in order to interact with the real world and to act in complex situations or environments.
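The prediction-error principle behind predictive coding can be sketched in a few lines: a unit keeps a running prediction of its next input and corrects it in proportion to the prediction error, so a persistent input stops being "surprising" while a sudden change produces a large error. The function name, learning rate, and input stream below are illustrative assumptions; predictive-coding networks typically stack many such units hierarchically and learn far richer predictions.

```python
def track_with_prediction_errors(inputs, lr=0.2, initial_prediction=0.0):
    """Return the final prediction and the prediction error seen at each step."""
    prediction = initial_prediction
    errors = []
    for x in inputs:
        error = x - prediction    # prediction error ("surprise") for this input
        errors.append(error)
        prediction += lr * error  # nudge the prediction towards what was observed
    return prediction, errors


if __name__ == "__main__":
    # A steady signal followed by a sudden change (hypothetical data).
    stream = [5.0] * 50 + [8.0]
    final_prediction, errors = track_with_prediction_errors(stream)
    print(f"first error={errors[0]:.3f}, error before change={errors[49]:.5f}, "
          f"error at change={errors[50]:.3f}")
```

The error shrinks geometrically while the input stays constant and jumps when the input changes; that jump is the kind of signal an attention mechanism could use to focus on a new event.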

Limitations of Current Neural Networks and their Solutions

As discussed above, the limitation of the present neural networks is that they are not capable of predicting or reasoning about content, and they also suffer from temporal instabilities.

One solution to this problem is provided by neural network capsules, e.g. when operating on video frames. This is done by making the capsules used for routing look at multiple recent data points, which in effect is an associative memory over the most important data points of the recent past. It is important to note that these are the most recent representations that differ in content, e.g. those obtained by saving only representations that differ by more than a predefined value.
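The memory described above, which keeps only recent representations that differ by more than a predefined value, can be sketched as follows. The class name `NoveltyMemory`, the use of Euclidean distance, the threshold, and the capacity are all hypothetical choices for illustration; the text does not specify how capsule-based systems measure the difference between representations.

```python
import math
from collections import deque


class NoveltyMemory:
    """Hypothetical helper: keep at most `capacity` recent representations,
    storing a new one only if it differs from every stored one by more than
    `threshold` (Euclidean distance)."""

    def __init__(self, capacity: int, threshold: float):
        self.items = deque(maxlen=capacity)  # the oldest entry drops out when full
        self.threshold = threshold

    def observe(self, vector) -> bool:
        """Store `vector` if it is novel enough; return True when it was stored."""
        vector = tuple(vector)
        if any(math.dist(vector, m) <= self.threshold for m in self.items):
            return False  # too similar to something already remembered
        self.items.append(vector)
        return True


if __name__ == "__main__":
    mem = NoveltyMemory(capacity=3, threshold=0.1)
    frames = [(0.0, 0.0), (0.05, 0.0), (1.0, 0.0), (1.02, 0.01), (2.0, 1.0), (3.0, 3.0)]
    print([mem.observe(f) for f in frames], list(mem.items))
```

Near-duplicate frames are skipped, and once the memory is full the oldest distinct representation is forgotten, so the stored set always consists of the most recent representations with different content.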

The second solution is provided by the predictive abilities of neural networks, which in fact amount to dynamic routing that forces each layer to predict the representation of the next layer. This has proved to be a very powerful self-learning technique, which seems to be more efficient than other types of unsupervised representation learning developed and reported in the literature. Research is now under way on developing capsules able to predict long-term temporal relationships.

Another important approach is continuous learning, since neural networks need to learn new data points continuously. It has to be noted that the current neural networks are not capable of learning new data without undergoing retraining from scratch. New neural networks have to be developed that can assess for themselves when new training is needed and that know what they already know. They will clearly have to perform real-life and reinforcement learning tasks, in which machines are taught new tasks without forgetting the previous ones.

Transfer learning has also proved to be a useful technique here; it concerns the way these algorithms can learn by themselves by watching videos, much as we learn a certain type of cooking, an ability that requires all the components important for reinforcement learning. In this way, we could train a machine to do what we want simply by giving it a relevant example, as we do with human beings; this clearly requires stupendous effort, along with very high IQ and a high degree of patience.

Reinforcement learning is among the latest areas of neural network research, teaching machines to learn how to act in an environment and in real-world situations. Obviously, this requires self-learning, and reinforcement learning is hence employed for continuous learning, for developing predictive power, and for many other related purposes. It can be viewed as just a minor training on top of a plastic synthetic brain. However, the difficulty lies in obtaining a generic brain capable of solving all problems, and the two problems depend on each other, which renders the task very complicated indeed. This explains why, at present, reinforcement learning problems are solved one by one, using standard neural networks. Ultimately, the aim of AI is to develop machines that can operate and work like normal humans.

Recurrent neural networks (RNNs), on the other hand, are no longer considered a good choice, especially because they are hard to parallelize for training and are slow even on special custom machines owing to their very high memory-bandwidth usage; that is, they are memory-bandwidth-bound. The next wave of neural network advances is expected to combine associative memories and attention, and to be able to learn sequences as well as RNNs do.
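To illustrate what "learning how to act in an environment" means, the sketch below runs tabular Q-learning, a classic reinforcement-learning algorithm, on a hypothetical five-state corridor in which the agent is rewarded only on reaching the right-hand end. No neural network is involved (a lookup table stores the action values), and the corridor, the reward, the learning rate `alpha`, the discount factor `gamma`, and the episode count are all assumptions made for illustration. The agent explores with uniformly random actions; because Q-learning is off-policy, it still learns the values of the best (greedy) policy.

```python
import random

N_STATES = 5        # states 0..4; state 4 is the goal (terminal)
ACTIONS = (-1, +1)  # move left or right along the corridor


def step(state, action):
    """Deterministic corridor: reward 1 only on reaching the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == N_STATES - 1 else 0.0
    return nxt, reward, nxt == N_STATES - 1


def q_learning(episodes=500, alpha=0.5, gamma=0.9, max_steps=100, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            action = rng.choice(ACTIONS)  # uniformly random behaviour policy
            nxt, reward, done = step(state, action)
            # Q-learning target: bootstrap from the best action in the next state.
            target = reward if done else reward + gamma * max(q[(nxt, b)] for b in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
            if done:
                break
    return q


def greedy_policy(q):
    """Best learned action in every non-terminal state."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]


if __name__ == "__main__":
    print("greedy policy (+1 = move right):", greedy_policy(q_learning()))
```

Deep reinforcement learning replaces the table `q` with a neural network so that the same update can scale to environments with far too many states to enumerate.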

In addition, important work is under way on the localization of information in categorization neural networks. Such networks are able to localize and detect key-points in images and videos extensively, and it is expected that this capability will be embedded in future neural network architectures.

Work on hardware for deep learning is now progressing at a brisk pace. As is well known, the cheap image sensors in every phone, helped by social media, have allowed the collection of huge datasets, and GPUs have accelerated the training of deep neural networks. Many companies, including Bitmain, Cambricon, Cerebras, DeePhi, Google, Graphcore, and Huawei, are developing custom high-performance microchips capable of training and running deep neural networks. They are trying to provide the lowest power and the highest measured performance when computing the operations of recent, useful neural networks. It is now being realized that hardware can really change machine learning, neural networks, and AI, and interest is also being shown in understanding the theory and development of microchips.

It is now well understood that hardware which can provide faster training at lower power is of great importance in the field of AI, since it will help in creating and testing new models and applications faster. However, the really significant step in this direction is the development of hardware for applications, especially for inference. In fact, many applications are not possible or practical today because efficient hardware is unavailable, even though the relevant software exists. Our phones can already be speech-based assistants, but our home assistants are tied to power supplies and depend on multiple microphones or devices around the home. One of the aims in this direction is to remove the phone screen and embed it into our visual system, which will be possible only with super-efficient hardware.

Categorizing images and videos is already possible in many cloud services, and work is in progress on doing the same for smart camera feeds. Neural network hardware is expected to reduce the dependence on the cloud by processing more and more data locally in an efficient manner.

Another important application is that of speech-based assistants, which are becoming very important in our lives, as they are able to play music and control basic devices in our smart homes. In addition, small devices we can talk to are bringing a revolution.

Finally, research is also going on into real artificial assistants which, in addition to having great voices, are also able to see like us and analyse our environment as we move around. The next step is the real AI assistant with whom we could even fall in love. This is also considered possible, and many smart start-ups, like Alpoly, have already developed such software.

Scientific management is an approach to management based on the principles of engineering, which focuses on incentives and other practices empirically shown to improve productivity. Chopra [13-21] has described and technically discussed various techniques followed by managers for improving the performance and profit of an organization. Simulation techniques have become very popular for scientific management. Although different types of software are available from commercial firms, some managers with computer programming skills find it handy to use their own simulation techniques. Two types of simulation techniques are commonly employed: (i) simulation using quantum computing with spins based on qubits, and (ii) simulation for optimization in organizations. The first, with spins based on qubits, is a concept from applied mathematics and is somewhat difficult to use. The second is easy to use and is more commonly employed by managers, especially for applications in commerce.

Both these techniques are very briefly discussed below:

Theory of Simulation using Quantum Computing with Spins based on Qubits

For understanding the complicated concepts of AI, it is important to understand the theory of quantum computing (important for simulation studies) with spins based on qubits, which is a concept from applied mathematics. In one such scheme, logical operations on individual spins are performed using externally applied electric fields, and spin measurements are made using currents of spin-polarized electrons. Interestingly, the realization of such a computer depends on future refinements of conventional silicon electronics. Quantum computing is done by using particular architectures for semiconductor implementations, and the pioneering work on this topic is still quite important and relevant at present. The commonly used architectures are given below:

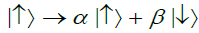

Quantum computing with electron/nuclear spins is done by using an ideal qubit given by:

$$|\uparrow\rangle \equiv |0\rangle, \qquad |\downarrow\rangle \equiv |1\rangle \qquad (1)$$

In this ideal qubit, the spin in the upward direction is taken as zero, and that in the downward direction as unity. In quantum computing, and specifically in the quantum circuit model of computation, a quantum gate (or quantum logic gate) is a basic quantum circuit operating on a small number of qubits; such gates are the building blocks of quantum circuits. Unlike many classical logic gates, quantum logic gates are reversible. The interaction is studied in terms of two types of gates:

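1-qubit gate, which performs spin rotation, given by:

$$H_1(t) = g\,\mu_B\,\mathbf{B}(t)\cdot\mathbf{S} \qquad (2)$$

where $\mathbf{B}(t)$ is the applied magnetic field and $\mathbf{S}$ is the spin operator; $g$ and $\mu_B$ are defined after Eq. (5).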

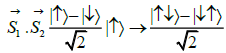
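For a spin-1/2 with $\mathbf{S} = \boldsymbol{\sigma}/2$ and $\hbar = 1$, a constant field along a unit vector $\mathbf{n}$ applied for a time $t$ turns Eq. (2) into the gate $U = \exp(-i\theta\,\mathbf{n}\cdot\boldsymbol{\sigma}/2) = \cos(\theta/2)\,I - i\sin(\theta/2)\,\mathbf{n}\cdot\boldsymbol{\sigma}$, with $\theta = g\mu_B|\mathbf{B}|t/\hbar$. The sketch below applies this closed form in plain Python; supplying the rotation angle directly as a dimensionless parameter, and the helper names `rotation` and `apply`, are assumptions made for illustration.

```python
import math

# Pauli matrices; with hbar = 1 the spin operator is S = sigma / 2.
SX = [[0, 1], [1, 0]]
SY = [[0, -1j], [1j, 0]]
SZ = [[1, 0], [0, -1]]

UP = [1, 0]  # |up> = |0>, as in Eq. (1)


def rotation(theta, n):
    """U = exp(-i * theta/2 * n.sigma) = cos(theta/2) I - i sin(theta/2) n.sigma.

    theta plays the role of g * muB * |B| * t / hbar for a constant field
    along the unit vector n = (nx, ny, nz)."""
    nx, ny, nz = n
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[(c if i == j else 0) - 1j * s * (nx * SX[i][j] + ny * SY[i][j] + nz * SZ[i][j])
             for j in range(2)] for i in range(2)]


def apply(u, state):
    """Multiply a 2x2 gate by a two-component state vector."""
    return [u[0][0] * state[0] + u[0][1] * state[1],
            u[1][0] * state[0] + u[1][1] * state[1]]


if __name__ == "__main__":
    flipped = apply(rotation(math.pi, (1.0, 0.0, 0.0)), UP)
    print("P(down) after a pi rotation about x:", abs(flipped[1]) ** 2)
```

A rotation by $\pi$ about $x$ flips $|\uparrow\rangle$ to $|\downarrow\rangle$ (a NOT gate up to a phase), while a rotation by $\pi/2$ creates an equal superposition of $|0\rangle$ and $|1\rangle$.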

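2-qubit gate, which performs the exchange interaction, given by:

$$H_2(t) = J(t)\,\mathbf{S}_1\cdot\mathbf{S}_2 \qquad (3)$$

where $\mathbf{S}_1\cdot\mathbf{S}_2$ is the spin interaction, in the form of an exchange interaction between the first and second sites, and $J(t)$ is the time-dependent exchange coupling.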

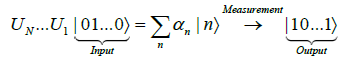
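Evolving two spins under Eq. (3) for a pulse whose area $\int J(t)\,dt/\hbar$ equals $\pi$ swaps their states up to a global phase, and half that pulse gives the "square root of SWAP", which, combined with single-qubit rotations, is the usual route to a universal two-qubit gate in spin-qubit proposals. The sketch below builds $\mathbf{S}_1\cdot\mathbf{S}_2$ from Pauli matrices and exponentiates it with a truncated Taylor series; the helper names, the pulse areas, and the number of Taylor terms are choices made for this illustration.

```python
import math

PAULI = ([[0, 1], [1, 0]], [[0, -1j], [1j, 0]], [[1, 0], [0, -1]])
SWAP = [[1, 0, 0, 0], [0, 0, 1, 0], [0, 1, 0, 0], [0, 0, 0, 1]]


def kron(a, b):
    """Kronecker product of two 2x2 matrices (basis |00>, |01>, |10>, |11>)."""
    return [[a[i // 2][j // 2] * b[i % 2][j % 2] for j in range(4)] for i in range(4)]


def matmul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def expm(m, terms=40):
    """Matrix exponential by a truncated Taylor series; adequate for small norms."""
    n = len(m)
    result = [[complex(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms):
        term = [[x / k for x in row] for row in matmul(term, m)]  # term = m**k / k!
        result = [[r + t for r, t in zip(rr, tr)] for rr, tr in zip(result, term)]
    return result


# S1.S2 = (sx(x)sx + sy(x)sy + sz(x)sz) / 4 with hbar = 1.
S1_DOT_S2 = [[sum(kron(p, p)[i][j] for p in PAULI) / 4 for j in range(4)] for i in range(4)]


def exchange_gate(pulse_area):
    """U = exp(-i * A * S1.S2), where A stands for the integral of J(t) dt / hbar."""
    return expm([[-1j * pulse_area * x for x in row] for row in S1_DOT_S2])


def equals_up_to_phase(u, v, tol=1e-9):
    """True if u = exp(i*phi) * v for a single global phase phi."""
    n = len(v)
    i, j = max(((r, c) for r in range(n) for c in range(n)),
               key=lambda rc: abs(v[rc[0]][rc[1]]))
    phase = u[i][j] / v[i][j]
    return abs(abs(phase) - 1) < tol and all(
        abs(u[r][c] - phase * v[r][c]) < tol for r in range(n) for c in range(n))


if __name__ == "__main__":
    print("pi pulse is SWAP up to phase:", equals_up_to_phase(exchange_gate(math.pi), SWAP))
```

The global phase $e^{-i\pi/4}$ that appears for the $\pi$ pulse follows from the triplet eigenvalue $1/4$ of $\mathbf{S}_1\cdot\mathbf{S}_2$; it has no observable effect.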

Finally, quantum algorithms for factoring and searching are represented by:

(4)

where U is the controlled-U gate. It can be understood that the input, in the form of the first term on the left-hand side of this equation, is equal to the middle term, which on measurement gives the output in the form of the term on the right-hand side. Also, |u⟩ is an eigenstate of U, |1⟩ being the component in the first qubit.

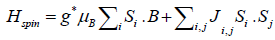

Two Spins in Two Quantum Dots: Quantum Gates

The universal set of quantum gates is formed by the combined effect of the Heisenberg interaction and the local magnetic field. The Heisenberg Hamiltonian, $H_{\text{spin}}$, is based on the Heisenberg model and is given by:

$$H_{\text{spin}} = \sum_{\langle i,j\rangle} J_{ij}(t)\,\mathbf{S}_i\cdot\mathbf{S}_j + \sum_{i} g\,\mu_B\,\mathbf{B}_i(t)\cdot\mathbf{S}_i \qquad (5)$$

where $J$ is the coupling constant for a one-dimensional model consisting of $N$ dipoles ($J_{i,i+1} = J$ for nearest neighbours), $g$ is the g-factor, defined as the dimensionless proportionality factor relating the system's angular momentum to its intrinsic magnetic moment (unity in classical physics), $\mu_B$ is the Bohr magneton, $\mathbf{B}_i$ is the magnetic field experienced at site $i$, $i$ and $j$ refer to the sites on a lattice, $\mathbf{S}_i$ are spin operators which live on the lattice sites, $\mathbf{S}_i\cdot\mathbf{S}_j$ is the spin interaction, in the form of an exchange interaction between the $i$-th and $j$-th sites, and $J_{i,j}$ are called exchange constants. It is important to note that the spin quantum number $S$ is an integer or half-integer.
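To make Eq. (5) concrete, the sketch below applies $H_{\text{spin}}$ to a state of a short open chain of spin-1/2 sites. It assumes, for illustration, uniform nearest-neighbour coupling $J_{i,i+1} = J$, a uniform field along $z$, $\hbar = 1$, and a single parameter `zeeman` standing for $g\mu_B|\mathbf{B}|$. It uses the identity $\mathbf{S}_i\cdot\mathbf{S}_j = S_i^zS_j^z + (S_i^+S_j^- + S_i^-S_j^+)/2$, so only antiparallel neighbours are flipped; states are dictionaries from basis integers to amplitudes, with bit $i = 0$ meaning spin up at site $i$, as in Eq. (1). The function name is hypothetical.

```python
import math


def apply_heisenberg(amplitudes, n_sites, J, zeeman):
    """Return H|psi> for H = J * sum_i S_i.S_{i+1} + zeeman * sum_i S^z_i
    on an open chain (hbar = 1; zeeman stands for g * muB * B with B along z).

    `amplitudes` maps basis integers to amplitudes; bit i = 0 means spin up
    at site i and bit i = 1 means spin down, following Eq. (1)."""
    out = {}
    for basis, amp in amplitudes.items():
        sz = [-0.5 if (basis >> i) & 1 else 0.5 for i in range(n_sites)]
        diagonal = zeeman * sum(sz)                # Zeeman term
        for i in range(n_sites - 1):
            diagonal += J * sz[i] * sz[i + 1]      # S^z_i S^z_{i+1} term
            if sz[i] != sz[i + 1]:
                # (S+_i S-_{i+1} + S-_i S+_{i+1}) / 2 swaps an antiparallel pair
                flipped = basis ^ (1 << i) ^ (1 << (i + 1))
                out[flipped] = out.get(flipped, 0) + 0.5 * J * amp
        out[basis] = out.get(basis, 0) + diagonal * amp
    return out


if __name__ == "__main__":
    s = 1 / math.sqrt(2)
    singlet = {0b10: s, 0b01: -s}  # (|up,down> - |down,up>) / sqrt(2)
    print(apply_heisenberg(singlet, n_sites=2, J=1.0, zeeman=0.0))
```

For two sites the singlet comes back multiplied by $-3J/4$, and the fully polarized state of an $N$-site chain has energy $J(N-1)/4 + N\,g\mu_B B/2$, the familiar eigenvalues of the exchange and Zeeman terms.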

(6),

(6),

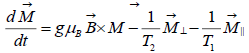

The quantum gates are of the form: It has also to be noted that the Bloch’s equation is used to study (i) the total effect of Spin-orbit and phonons, (ii) the total effect of Hyperfine and phonons, (iii) the total effect of Spin-orbit and photons. This Eqn. is given by:

$$\frac{d\mathbf{M}}{dt} = \gamma\,\mathbf{M}\times\mathbf{B} - \frac{M_x\hat{\mathbf{x}} + M_y\hat{\mathbf{y}}}{T_2} - \frac{(M_z - M_0)\,\hat{\mathbf{z}}}{T_1} \qquad (7)$$

where $d\mathbf{M}/dt$ is the rate of change of the nuclear magnetization, $M_x$ and $M_y$ are the components of the transverse nuclear magnetization, $M_z$ is the longitudinal nuclear magnetization, $\gamma$ is the gyromagnetic ratio, $M_0$ is the equilibrium magnetization, and $T_1$ and $T_2$ are, respectively, the longitudinal and transverse relaxation times. These equations are also used to study spectral diffusion, which arises from the sum of the nuclear spins and time-dependent magnetic fields. However, the case of unresolved hyperfine structure is quite complicated to study. Also, many other factors, such as different g-factors, inhomogeneous fields, and dipolar/exchange interactions between unlike spins, pose problems in analysing such cases.
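Eq. (7) can be integrated numerically for a constant field, which is a quick way to see the $T_1$ recovery, the $T_2$ decay, and the precession about $\mathbf{B}$. The sketch below uses a classical fourth-order Runge-Kutta step; the dimensionless values of $\gamma$, $\mathbf{B}$, $M_0$, $T_1$, and $T_2$ in the example are hypothetical.

```python
import math


def bloch_rhs(m, b, gamma, t1, t2, m0):
    """Right-hand side of Eq. (7): precession about B plus T1/T2 relaxation."""
    mx, my, mz = m
    bx, by, bz = b
    cx, cy, cz = my * bz - mz * by, mz * bx - mx * bz, mx * by - my * bx  # M x B
    return (gamma * cx - mx / t2,
            gamma * cy - my / t2,
            gamma * cz - (mz - m0) / t1)


def rk4_step(m, dt, f):
    """One classical fourth-order Runge-Kutta step for dm/dt = f(m)."""
    k1 = f(m)
    k2 = f(tuple(a + dt / 2 * k for a, k in zip(m, k1)))
    k3 = f(tuple(a + dt / 2 * k for a, k in zip(m, k2)))
    k4 = f(tuple(a + dt * k for a, k in zip(m, k3)))
    return tuple(a + dt / 6 * (p + 2 * q + 2 * r + s)
                 for a, p, q, r, s in zip(m, k1, k2, k3, k4))


def simulate(m, b, gamma, t1, t2, m0, dt, steps):
    """Integrate the Bloch equations for a constant field b; return M at t = steps * dt."""
    def f(state):
        return bloch_rhs(state, b, gamma, t1, t2, m0)

    for _ in range(steps):
        m = rk4_step(m, dt, f)
    return m


if __name__ == "__main__":
    # Hypothetical dimensionless values: T1 = 2, T2 = 1, M0 = 1, no field.
    mx, my, mz = simulate((1.0, 0.0, 0.0), (0.0, 0.0, 0.0), 1.0, 2.0, 1.0, 1.0, 0.01, 100)
    print(f"Mx={mx:.5f} (exact {math.exp(-1):.5f}), "
          f"Mz={mz:.5f} (exact {1 - math.exp(-0.5):.5f})")
```

Without a field the results match the closed forms $M_x = M_x(0)e^{-t/T_2}$ and $M_z = M_0(1 - e^{-t/T_1})$; with a field along $z$ the transverse magnetization additionally rotates at the rate $\gamma B$.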

Simulation for optimization in organization

It is interesting to note that simulation is used not just to focus on declarative learning; a more important purpose is base-level learning, for which the base-level activation $B_i$ is the most important and commonly used formula of the cognitive architecture ACT-R. Following the approach of [22,23], the equation used for estimating the odds over all presentations of a group is:

$$B_i = \ln\left(\sum_{j=1}^{n} T_j^{-d}\right) + \beta \qquad (8)$$

where $n$ is the number of presentations of the group, $T_j$ denotes the time difference ($T_j = T_{now} - T_{presentation}$) since the $j$-th presentation of the group, $d$ denotes the decay rate, and $\beta$ denotes the initial activation. Base-level learning thus defines the logarithm of a sum of power functions, which approximates the Power Law of Forgetting when no further chunks are presented and the Power Law of Learning when many consecutive chunks are presented. The manager of the organization has to note that the parameters of the base-level learning equation can assign a different rate of decay to each individual or each group, and thus allow the individual to forget when no presentations of a group take place and to remember when presentations do take place.
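Eq. (8) translates directly into code. In the sketch below, the default decay $d = 0.5$ is the value conventionally used in ACT-R modelling, while $\beta = 0$, the function name, and the presentation times in the example are hypothetical.

```python
import math


def base_level_activation(presentation_times, now, d=0.5, beta=0.0):
    """Eq. (8): B_i = ln(sum_j T_j**(-d)) + beta, with T_j = now - t_j."""
    lags = [now - t for t in presentation_times if t < now]
    if not lags:
        raise ValueError("at least one presentation before `now` is required")
    return math.log(sum(lag ** (-d) for lag in lags)) + beta


if __name__ == "__main__":
    print(base_level_activation([0.0], now=4.0))       # one old presentation
    print(base_level_activation([0.0, 3.0], now=4.0))  # plus a recent one: higher
    print(base_level_activation([0.0], now=16.0))      # more time passed: lower
```

A second, recent presentation raises the activation (learning), while letting more time pass since the only presentation lowers it (forgetting), which are the two power-law effects described above.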

As is to be expected, declarative groups are retrieved on the basis of matching and highest activation, whereas procedures are selected on the basis of their expected gain or utility. If the manager is involved in solving a goal, the individuals can be asked to solve the problem. However, as ACT-R is a serial processor, it has no choice other than to select the single procedure with the highest expected gain or utility. In this approach, the utility of a procedure is defined as:

$$U = P\,G - C + \sigma \qquad (9)$$

where $P$ denotes the learning probability, $G$ denotes the goal value, i.e. the importance ACT-R attaches to achieving a particular goal, $C$ denotes the cost of using the procedure, and $\sigma$ denotes a stochastic noise variable. It is possible to assign a different $G$ to each procedure, which influences the ACT-R engine in choosing a specific procedure; in general, however, the same $G$ is assigned to all procedures in most ACT-R simulations. The probability $P$ of learning is the product of two sub-probabilities: $q$, the probability that the procedure succeeds, and $r$, the probability of fulfilling the objective of the goal if the procedure is successful. The probability of learning is therefore defined as:

$$P = q\,r \qquad (10)$$

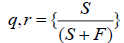

In Eq. (9), the cost $C$ denotes the amount of time required to complete the task with the procedure. Noise has to be added to the utility to create non-deterministic behaviour; with purely deterministic behaviour, ACT-R simulations would never follow any other procedure having similar parameters. It is important to understand how the parameters $q$ and $r$ are learned, since they have a strong impact on behaviour in the simulation. Procedural learning is of two types: (i) procedural symbolic learning, and (ii) procedural sub-symbolic learning. In the first type, ACT-R distinguishes between specialization, which is used for frequently recurring problems that become routine, and generalization, which is used in analogy problems. Surprisingly, ACT-R has no stable and clear solution for this type of procedural learning, and procedural sub-symbolic learning is therefore more prominently used in such studies. In this technique, the probability parameters $q$ and $r$ describe the success ratio of the use of the procedure, reflecting the frequency of successes and failures of a production. The parameter $q$ is the success ratio of directly completing the procedure; it keeps a record of the most recent successful executions of the condition and action parts of a procedure. The parameter $r$ gives the computed success ratio of completing the objective of a goal, after solving all the sub-goals and after achieving and popping the current goal level.

In other words, all goals that follow the current goal have to be fulfilled successfully to accomplish the current goal. The learning probability is defined as:

$$P = \frac{S}{S + F} \qquad (11)$$

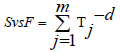

which makes it clear that, at the beginning, either $S$ (successes) $\geq 1$ or $F$ (failures) $\geq 1$. By default, the procedural parameters are initialized with $S = 1$ and $F = 0$, reflecting an optimistic view of the success of the procedure being followed. It has to be carefully noted that, so far, this simulation treatment has not considered any time component, which implies that past events and efforts are weighted equally with those experienced at present. In general, however, organizers are observed to be more aware of the impact of present events and experiences than of past ones. Therefore, ACT-R is modified by using a power-decay function, similar to the base-level learning equation, to discount the impact of past experiences. The modified formula for discounting successes and failures is given by:

$$\text{Successes (or Failures)} = \sum_{j=1}^{m} T_j^{-d} \qquad (12)$$

where $m$ denotes the number of successes (or failures), $T_j$ the time elapsed since the $j$-th success (or failure), and $d$ the corresponding decay rate. The organizer can use this formula to assign different decay rates to successes and failures. Hence, the manager has to improve the performance of the organization by optimizing various parameters, for which his experience is very important; his past interactions with employees and his understanding of market trends play an important role here. In some complicated cases, the use of software, which is now commercially available, is also required.
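Eqs. (11) and (12) can be combined in a few lines: discounted counts of past successes and failures replace the raw counts in the success ratio, so a recent failure weighs more than older successes. The function names and the event times in the example are hypothetical, and the same decay rate is used for successes and failures only for simplicity.

```python
def discounted_count(event_times, now, d):
    """Eq. (12): sum of T_j**(-d) over past successes (or failures)."""
    return sum((now - t) ** (-d) for t in event_times if t < now)


def success_probability(successes, failures):
    """Eq. (11): P = S / (S + F); needs S + F > 0 (e.g. the default S = 1, F = 0)."""
    if successes + failures <= 0:
        raise ValueError("need at least one success or failure before estimating P")
    return successes / (successes + failures)


if __name__ == "__main__":
    print(success_probability(1, 0))  # optimistic default initialization
    s = discounted_count([0.0, 1.0], now=9.0, d=0.5)  # two old successes
    f = discounted_count([8.0], now=9.0, d=0.5)       # one recent failure
    print(success_probability(2, 1), success_probability(s, f))
```

Without discounting, two successes and one failure give $P = 2/3$; with discounting, the recent failure dominates and $P$ drops to about 0.41.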

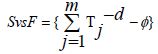

However, in certain cases a sudden unforeseen event may take place that affects this situation. Eq. (11) may then be modified as follows:

(13)

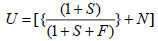

where ∅ denotes the effect of the sudden unforeseen event. In certain cases, it is possible to extend this analysis by including individual experiences and the memory of past experiences, using a simple form of learning based on the utility (U) function of ACT-R, given as:

(14)

where each employee remembers his present score and adapts his strategy in the firm from time to time. It is obvious that at T=0, the individual has no preferences and hence makes the selection based on his experience or even randomly. However, in the ACT-R simulation, the utility computation is quite complicated, and Noise (N) has to be added to compensate for the variance in decision making, and is given as:

(15)

Also, in the simulation experiment, it has to be noted that the individuals are provided with an equal personal construct, i.e. the initialization parameters of all employees are equal: they have equal decay rates, equal utility preferences, equal motivation values for solving goals, and equal procedural memory, declarative memory, and noise. If they have different values of these parameters, a weighted average is taken in the computation, where each weight expresses the importance of the corresponding parameter in the final computation and has to be taken into account to reach the correct value.
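The serial, utility-based choice described by Eqs. (9) and (10), with noise added to the utility, can be sketched as follows. We assume, for illustration, that $\sigma$ is drawn from a logistic distribution with scale `s`, as is conventional in ACT-R; the procedure names and the values of $q$, $r$, $C$, and $G$ are hypothetical, and every simulated individual shares the same parameters, in line with the equal personal construct described above.

```python
import math
import random


def utility(q, r, goal_value, cost, noise=0.0):
    """Eq. (9) with Eq. (10): U = P*G - C + sigma, where P = q*r."""
    return q * r * goal_value - cost + noise


def logistic_noise(rng, s):
    """Sample sigma from a logistic distribution with scale s (assumed noise model)."""
    u = rng.random()
    while u == 0.0:  # avoid log(0); rng.random() never returns 1.0
        u = rng.random()
    return s * math.log(u / (1.0 - u))


def choose_procedure(procedures, goal_value, s, rng):
    """Serial selection: return the name of the procedure with the highest noisy utility."""
    best_name, best_u = None, -math.inf
    for name, (q, r, cost) in procedures.items():
        noise = logistic_noise(rng, s) if s > 0 else 0.0
        u = utility(q, r, goal_value, cost, noise)
        if u > best_u:
            best_name, best_u = name, u
    return best_name


if __name__ == "__main__":
    # Hypothetical procedures: (q, r, cost in seconds).
    procs = {"ask_team": (0.9, 0.9, 2.0), "solve_alone": (0.6, 0.9, 1.0)}
    rng = random.Random(1)
    picks = [choose_procedure(procs, goal_value=20.0, s=5.0, rng=rng) for _ in range(1000)]
    print({name: picks.count(name) for name in procs})
```

With `s = 0` the higher-utility procedure is always chosen; with noise, the alternative is still explored from time to time, which is the non-deterministic behaviour the noise term is meant to create.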

With the growth of the corporate world and the increase in the related complexities, novel advanced techniques are being applied to overcome them, so that managers can run their companies smoothly. Some of them are briefly discussed below:

Digital maturity

One of the latest important features of Advances in Management Techniques is the digitalization of Business. It has been reported in the literature that digitally maturing companies have been able to achieve bigger successes by (i) increasing collaboration, (ii) scaling innovation, and (iii) revamping their existing approach to talent.

Digital Transformation

Another interesting feature of such advancements in Management techniques has been discussed in a blog posted by Gerald C. Kane, a professor of information systems at the Carroll School of Management at Boston College and guest editor for MIT Sloan Management Review’s Digital Business Initiative, who argues that it is helpful to think of digital transformation in the form of continual adaptation to a constantly changing environment.

Appropriate Method of Reacting to the Negative Emotions at the Work Place

It is quite commonly observed that many managers tend to ignore, or turn a blind eye to, negative emotions and the related problems at the workplace. However, as emphasized by the management expert Christine M. Pearson, such tendencies are counterproductive and, in the long term, go against the interest of the company. Intelligent managers should take a keen interest in observing the negative emotions of the workforce under them, and then strive hard to remove these emotions by taking the employees into confidence and giving suitable suggestions, thus enabling them to be tension-free at the workplace.

One unusual but powerful management skill is the discipline of clearly articulating the problem for which a solution is being sought before taking any action. Such an approach is clearly very useful for solving complicated corporate problems, and AI is especially useful in such situations.

Role of Life in the Digital Matrix in Ending Corporate Culture as We Know It

It is now widely recognized that life in the digital matrix plays an important role in ending corporate culture as it is commonly known, a point emphasized by Paul Michelman, an expert in this field. He argues that, when working in a corporation and looking ahead to life in the digital matrix, the role of traditional corporate culture appears largely redundant.

It is now understood that, for a company to reach its full potential, popular innovation methodologies must be closely aligned with the realities and social dynamics of established businesses.

Transformation of Strategy into Results

During discussions at various management symposia, it has been recognized that management leaders face the complicated task of translating a complex strategy into simple and flexible guidelines that can be executed to deliver the desired results. AI can be usefully employed in such situations (Turning Strategy into Results).

Dilemma Faced by a Company with a Digital Strategy

Companies with a digital strategy face a difficult choice between "digital" and "transformation" when working in the digital economy. George Westerman of the MIT Initiative on the Digital Economy has argued that managers should forgo "digital" and focus on "transformation".

Addressing Sustainability

It is very important to develop workable and profitable sustainability strategies that reduce the impact on the global environment. This has been emphasized in the final report of an eight-year initiative by MIT Sloan Management Review and the Boston Consulting Group, which studied the methodologies employed by corporations to address sustainability. As explained in this report, this can be achieved by incorporating a few key lessons, including the following:

Steps Required for Reducing the Uncertainty and Instability

In the uncertain and unstable times commonly faced today, corporate executives must, in addition to managing their own companies, actively work to influence the broader systems within which their companies operate.

Improvement of Sustained Success through Innovation

It is generally thought that innovation is more of an art than a science. However, it is increasingly being realized that sustained success can be improved by making use of the latest innovations after understanding their secrets and hidden advantages. This point has been explained very clearly in (Harnessing the Secret Structure of Innovation).

Understanding the Structure of Digital Transformation

Digital transformation is undoubtedly useful for organizations. However, according to Professor Stephen J. Andriole of Villanova University, a leading expert in the field, the first step is to understand the realities of digital transformation before undertaking the technological transformation of the organization. Many researchers have presented and discussed their findings on innovation in articles published in MIT Sloan Management Review.

Profit Making from the Data Deluge

Nowadays, nearly all companies are awash in data. As pointed out by experts in this field, a company that works out how to derive a profit from this data deluge can distinguish itself in the marketplace (How to Monetize Your Data). A recent paper emphasizes adding artificial intelligence to the organization, a topic that is drawing increasing attention from business executives. The paper is informative and presents the SAS approach to AI; it explains the key concepts and gives process and implementation tips for using AI technologies in business and analytical strategies. SAS describes itself as the leader in analytics, empowering and inspiring customers around the globe to transform data into intelligence through its innovative software and services.

It has also been discussed that intelligence can be expressed by an equation. The equation for intelligence proposed by Alex Wissner-Gross should not be confused with Einstein's mass-energy equation $E = mc^2$, in which the energy $E$ equals the mass $m$ multiplied by the square of the speed of light, $c \approx 3\times10^{8}$ m/s. Wissner-Gross's equation instead describes intelligence as an entropic force. It provides a physical basis for intelligence, and this equation [24] states that every intelligent system behaves according to:

$$\mathbf{F} = T\,\nabla S_{\tau} \qquad (17)$$

where $S_{\tau}$ is the entropy of the system, evaluated over its possible future paths within a time horizon $\tau$ (the causal path entropy); $T$ is a notional temperature; and $\nabla$ is the gradient operator. (In statistical mechanics, entropy is an extensive property of a thermodynamic system that is closely related to the number $\Omega$ of microscopic configurations, or microstates, consistent with the macroscopic quantities that characterize the system, such as its volume, pressure, and temperature; Boltzmann's relation is $S = k_B \ln \Omega$.) The equation roughly states that there is a force in the direction of increasing entropy. This force is called the causal entropic force, and Wissner-Gross identifies it with intelligence.
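To make the causal entropic force in equation (17) more concrete, the following is a minimal Python sketch built on simplifying assumptions that are illustrative only and are not Wissner-Gross's exact formulation: a particle moves on a one-dimensional lattice of `size` cells bounded by walls, taking steps of -1, 0 or +1; every feasible future path of `tau` steps is treated as equally likely, so the causal path entropy reduces to the logarithm of the number of such paths (a Boltzmann-style count, in the spirit of $S = k_B \ln \Omega$ with $k_B = 1$); and the gradient is approximated by finite differences. The function names `causal_path_entropy` and `causal_entropic_force` are hypothetical.

```python
import math


def causal_path_entropy(size: int, tau: int) -> list[float]:
    """Return S_tau(x) = ln(number of feasible tau-step paths from x) for x in [0, size).

    ASSUMPTION (illustrative only): every feasible future path is equally likely,
    so the path entropy reduces to a Boltzmann-style log-count of paths.
    """
    counts = [1] * size  # with 0 steps left there is exactly one path from each cell
    for _ in range(tau):
        # A k-step path from p is one move m in {-1, 0, +1} that stays inside the
        # walls, followed by a (k-1)-step path from p + m.
        counts = [
            sum(counts[p + m] for m in (-1, 0, 1) if 0 <= p + m < size)
            for p in range(size)
        ]
    return [math.log(c) for c in counts]


def causal_entropic_force(size: int, tau: int, temperature: float = 1.0) -> list[float]:
    """Approximate F(x) = T * dS_tau/dx on the lattice with finite differences."""
    if size < 2:
        raise ValueError("the lattice needs at least two cells to take a gradient")
    entropy = causal_path_entropy(size, tau)
    force = []
    for x in range(size):
        # Central difference in the interior, one-sided difference at the walls.
        lo, hi = max(x - 1, 0), min(x + 1, size - 1)
        force.append(temperature * (entropy[hi] - entropy[lo]) / (hi - lo))
    return force


if __name__ == "__main__":
    for x, f in enumerate(causal_entropic_force(size=11, tau=5)):
        print(f"x={x:2d}  F={f:+.3f}")
```

In this toy setting the force is zero at the central cell and points away from each wall, that is, toward the position that keeps the largest number of future paths open. The original formulation weights paths by their probabilities under stochastic dynamics in continuous space; the uniform path count is used here only because it can be computed exactly with a short dynamic-programming recurrence.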

Given the tremendous drive shown by researchers in the fields of artificial intelligence and management, it is natural that attempts have been made to combine the two, leading to an entirely new and evolving field: the application of artificial intelligence in management. In addition, new advances are being made in neural networks, quantum computation, and simulation techniques, and important new achievements are therefore expected in the near future in these fascinating fields. Because this topic draws on ideas from physics and mathematics (e.g. the intelligence equation, which involves the mathematical concept of the gradient and the physical concepts of force, notional temperature, and entropy), it can be concluded that the field is evolving at a brisk pace and is likely to prove useful in practically all spheres of management.

Copyright © 2025 Research and Reviews, All Rights Reserved